Generative human body models are powerful tools for estimating 3D human bodies from partial observations. Our group has proposed several novel methods in the emerging research area of learning neural implicit body models. Our model, LEAP (Learning Articulated Occupancy of People), is the first articulated neural implicit representation that generalizes to diverse body shapes. Our more recent work, COAP (Compositional Articulated Occupancy of People), learns part-based body representations that are efficient and generalize to out-of-distribution poses. For modeling human hands and hand-object interaction, we proposed expressive implicit representations (e.g., Grasping Field). Our approach can synthesize realistic human grasps given 3D objects, and it received the Best Paper Award at the International Conference on 3D Vision (3DV) 2020.

Publications

Authors: Marko Mihajlovic, Shunsuke Saito, Aayush Bansal, Michael Zollhoefer and Siyu Tang

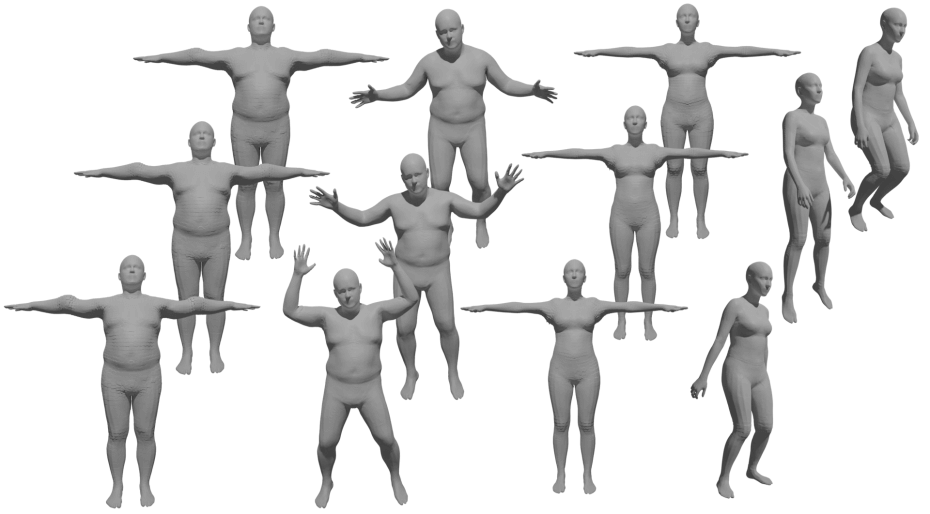

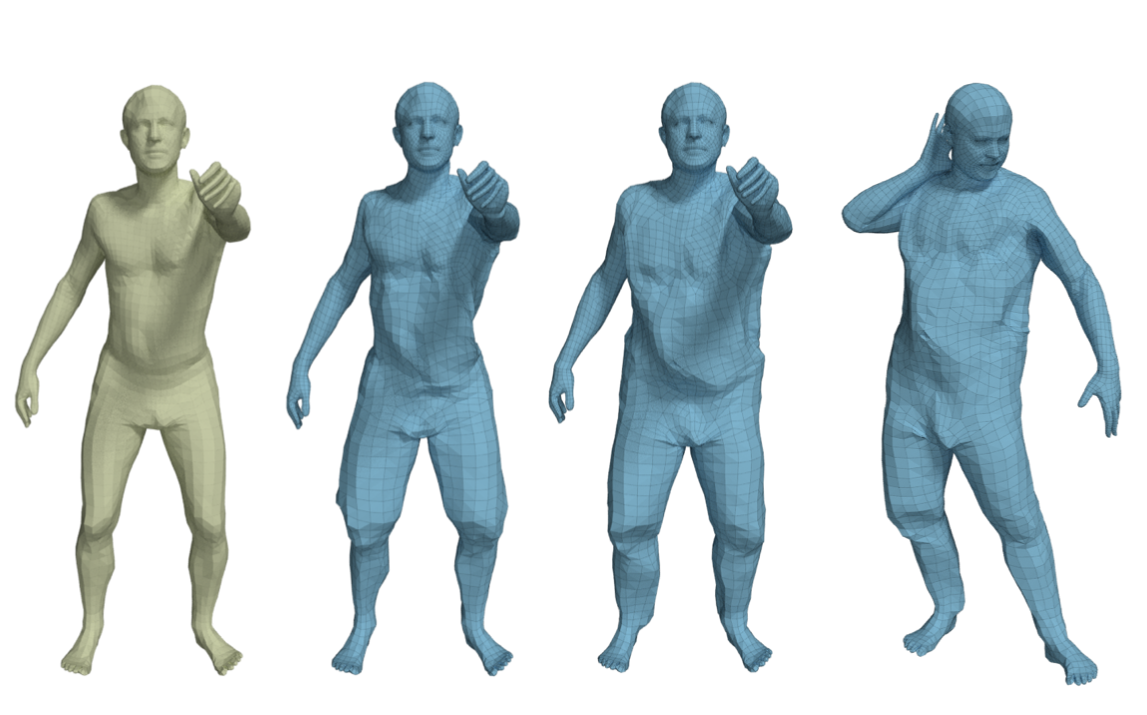

COAP is a novel neural implicit representation for articulated human bodies that provides an efficient mechanism for modeling self-contacts and interactions with 3D environments.

Authors: Korrawe Karunratanakul, Adrian Spurr, Zicong Fan, Otmar Hilliges, Siyu Tang

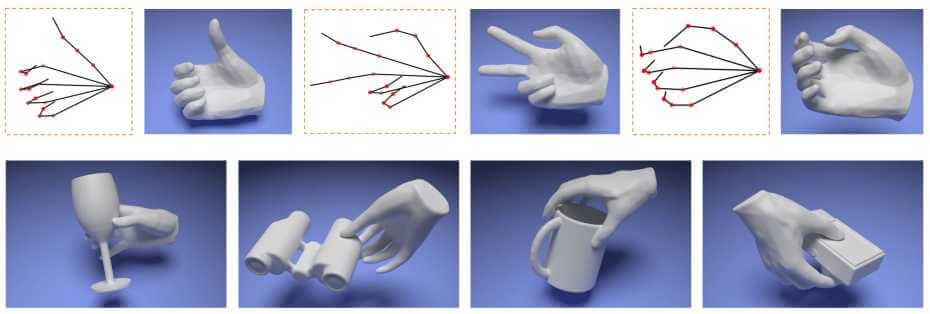

We present HALO, a neural occupancy representation for articulated hands that produces implicit hand surfaces from input skeletons in a differentiable manner.

Authors: Qianli Ma, Jinlong Yang, Siyu Tang and Michael J. Black

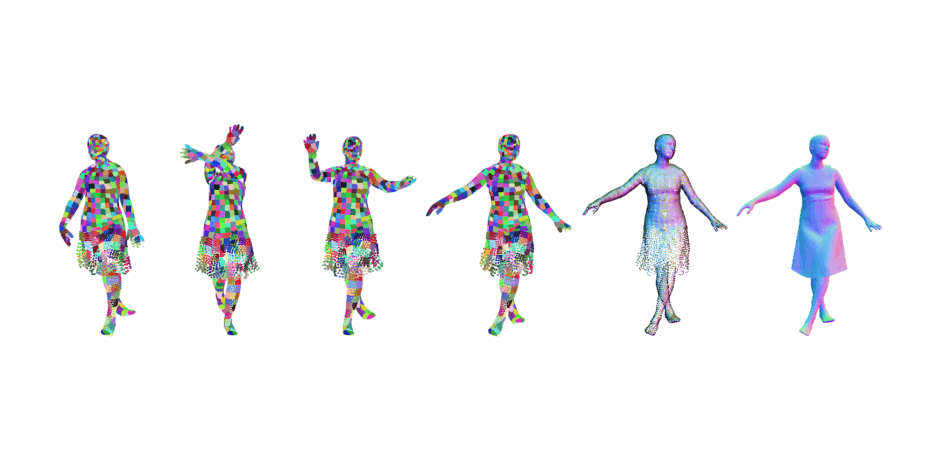

We introduce POP, a point-based, unified model for multiple subjects and outfits that can turn a single, static 3D scan into an animatable avatar with natural pose-dependent clothing deformations.

Authors: Marko Mihajlovic, Yan Zhang, Michael J. Black and Siyu Tang

LEAP is a neural network architecture for representing volumetric animatable human bodies. It follows traditional human body modeling techniques and leverages a statistical human prior to generalize to unseen humans.

Authors: Qianli Ma, Shunsuke Saito, Jinlong Yang, Siyu Tang and Michael J. Black

SCALE models 3D clothed humans with hundreds of articulated surface elements, resulting in avatars with realistic clothing that deforms naturally even in the presence of topological change.

Grasping Field: Learning Implicit Representations for Human Grasps

Conference: International Virtual Conference on 3D Vision (3DV) 2020 (oral presentation, Best Paper Award)

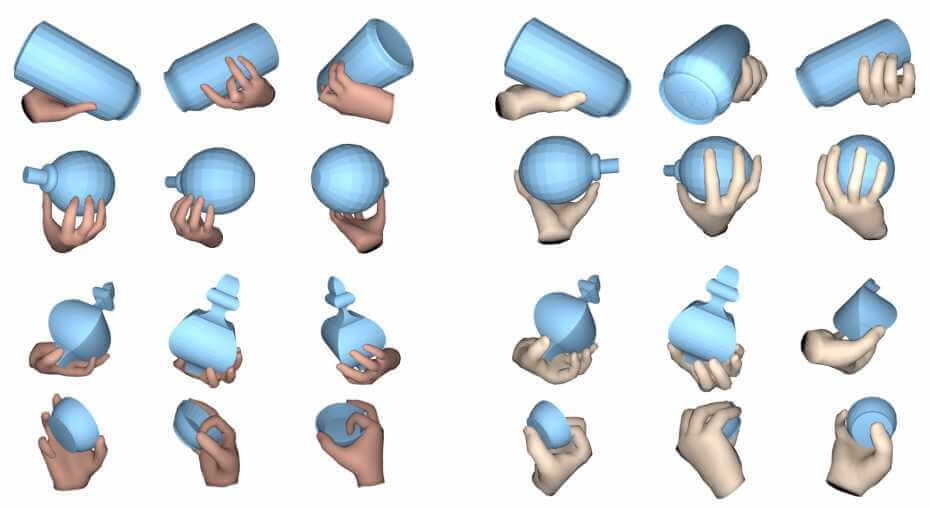

Authors: Korrawe Karunratanakul, Jinlong Yang, Yan Zhang, Michael Black, Krikamol Muandet, Siyu Tang

Capturing and synthesizing hand-object interaction is essential for understanding human behaviours, and is key to a number of applications including VR/AR, robotics and human-computer interaction.

Authors: Qianli Ma, Jinlong Yang, Anurag Ranjan, Sergi Pujades, Gerard Pons-Moll, Siyu Tang, and Michael J. Black

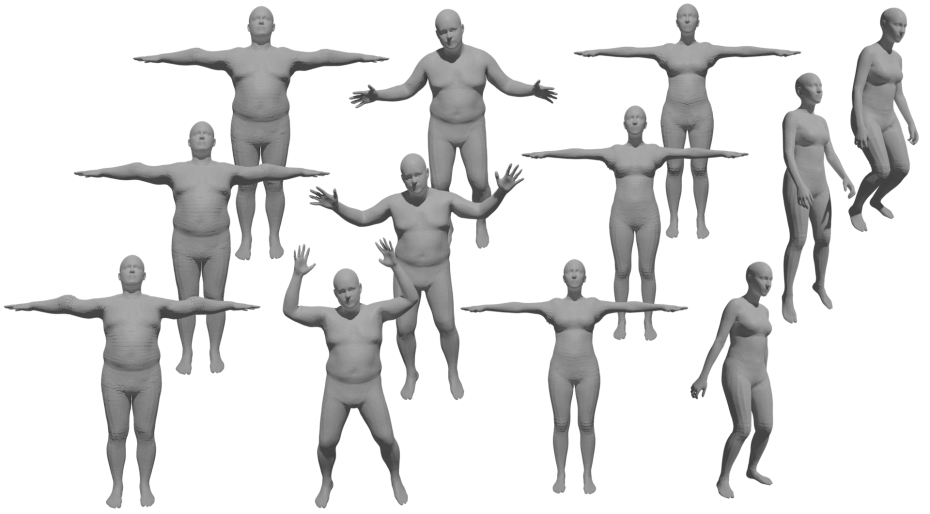

CAPE is a Graph-CNN-based generative model for dressing 3D meshes of the human body. It is compatible with the popular SMPL body model and can generalize to diverse body shapes and poses. The CAPE Dataset provides SMPL mesh registrations of 4D scans of people in clothing, along with registered scans of the ground-truth body shapes under clothing.