Abstract

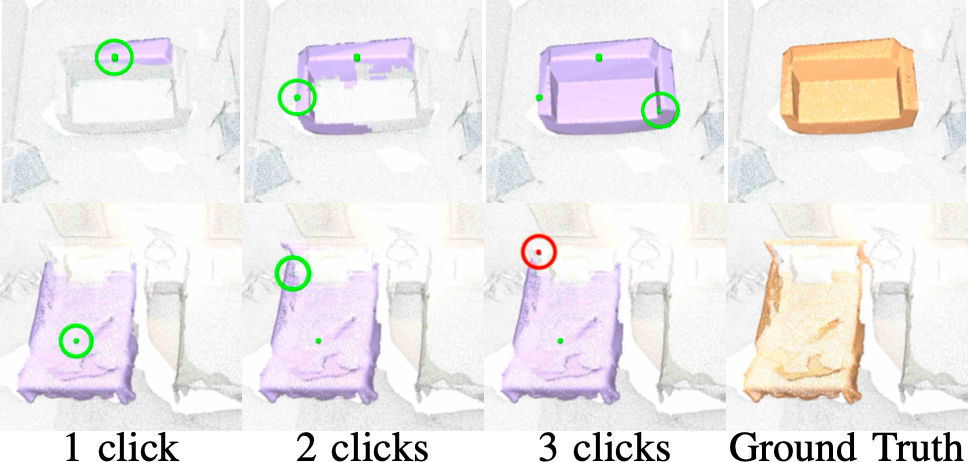

We propose an interactive approach for 3D instance segmentation, where users can iteratively collaborate with a deep learning model to segment objects directly in a 3D point cloud. Current methods for 3D instance segmentation are generally trained in a fully supervised fashion, which requires large amounts of costly training labels and does not generalize well to classes unseen during training. Few works have attempted to obtain 3D segmentation masks using human interactions, and existing methods rely on user feedback in the 2D image domain. As a consequence, users are required to constantly switch between 2D images and 3D representations, and custom architectures are employed to combine multiple input modalities. Integration with existing standard 3D models is therefore not straightforward. The core idea of this work is to enable users to interact directly with 3D point clouds by clicking on 3D objects of interest (or their background) to interactively segment the scene in an open-world setting. Specifically, our method does not require training data from any target domain and can adapt to new environments where no appropriate training sets are available. Our system continuously adjusts the object segmentation based on user feedback and achieves accurate dense 3D segmentation masks with minimal human effort (a few clicks per object). Besides its potential for efficient labeling of large-scale and varied 3D datasets, our approach, in which the user interacts directly with the 3D environment, enables new applications in AR/VR and human-robot interaction.